Agentic AI in Biopharma R&D: Lessons from Embedding Agents Inside Live Programs

Agentic AI Is Not a Tooling Upgrade — It’s an Operating Model Shift

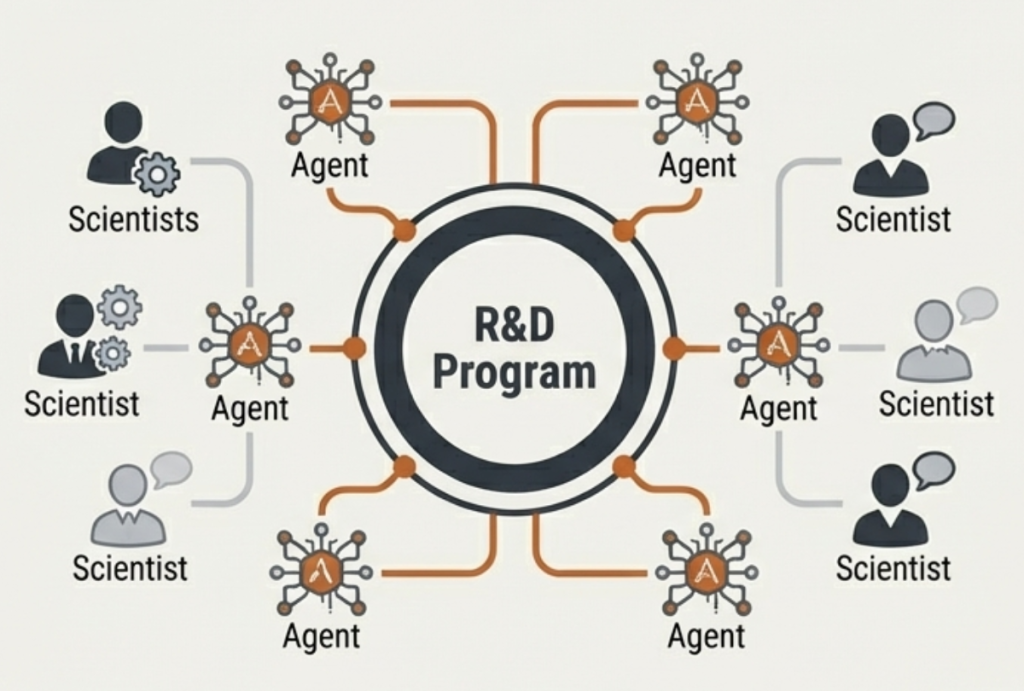

The real promise of Agentic AI lies in its ability to restructure how work in translational R&D is conducted: from intent to action, from evidence assembly to judgment, and from learning retention to application.

Agentic AI is often framed as an orchestration layer over tools or as a more autonomous co-pilot. In practice, neither framing survives contact with real biopharma R&D programs.

The hardest problems are not the literature search or the tool invocation. They are deciding which questions are worth answering, maintaining a clear line of sight between decisions that need to be made and the experiments that inform them, and operating effectively in the messy, iterative, high-stakes reality of translational R&D programs.

This post is a field report from our first year of applying Agentic AI to shift translational R&D toward a leaner, faster, and more effective operating model.

1. Why We Didn’t Wait for the Stack to Stabilize

The Agentic AI ecosystem is still evolving rapidly. Models improve, protocols emerge, platforms launch, and new claims appear almost weekly. It is tempting to wait for the stack to “settle.”

Early 2025, however, marked a genuine inflection point. Several threads that had been developing independently began to converge: post-training for reasoning in models, Model Context Protocol (MCP) for tool invocation, and Deep Research for sustained inquiry rather than single-turn assistance.

For the first time, Agentic AI moved from an aspirational concept to something we could responsibly deploy inside real translational R&D workflows.

We chose not to wait.

In early 2025, at PMWC, we publicly announced Igniva™, committing ourselves – deliberately and visibly – to Agentic AI as a core operating model for biopharma R&D. This was not a product launch in the traditional sense. It was a line in the sand: a decision to move beyond pilots and proofs-of-concept, and to embed agentic workflows across our internal programs and collaborations with global biopharma partners.

Biopharma does not stand still while the technology matures. Programs move forward, decisions get made, and opportunities are either seized or missed. The most meaningful insights about Agentic AI emerge when agents are embedded in live programs that operate under real-world constraints, rather than in isolated or benchmarked environments.

As the year progressed, announcements such as Google’s Co-Scientist, Microsoft Discovery, and Claude for Life Sciences (with AbbVie sharing their AI adoption journey) reinforced a shared realization: scientific work demands agents that can reason across tools, data, and long time horizons.

By the time these announcements surfaced, we were already deploying agentic workflows inside real programs and learning from their failures as much as their successes. That hands-on exposure, not theoretical readiness, helped shape the platform.

The result is a continuously evolving architecture, guided by what actually breaks, scales, and delivers value under real-world conditions.

2. What Changed When Agents Entered Real Programs

“I have hundreds of AI experts in my team, but Igniva is the first platform that showed me how science will get done in the future.”

— Senior Executive at a leading Global Biopharma

The acceleration was unmistakable, but not for the reasons we initially expected.

Speed did not come primarily from faster computations or more efficient pipelines. It came from collapsing the distance between intent, execution, and interpretation.

2.1 Action at the speed of thought, reusing what’s already validated

Scientists could express goals at the level they naturally think – exploring a mechanism, testing a hypothesis, comparing alternatives – and have those intents translated into coordinated actions across tools, data sources, and compute environments. Execution happened on demand, and results came back not just as raw outputs, but with contextual interpretation.

Existing R&D systems – data platforms, analytical environments, laboratory systems, and workflow tools – became first-class participants in the operating model, with agents working across them to maintain continuity of context, decisions, and execution.

Another unexpected gain came from reuse. Once tools, models, and workflows were packaged into the agentic stack for one program, they became immediately discoverable and usable across others. Reuse no longer depended on tribal knowledge or documentation; it emerged naturally from interaction.

2.2 Bringing together multiple disciplines & perspectives

Perhaps the biggest shift was cultural. Agentic workflows surfaced inefficiencies and delays that had been normalized over years of manual coordination. Decisions that once took days of back-and-forth could be made in hours, not because humans were removed from the loop, but because the loop itself became tighter.

The biggest gains came from faster and better decisions rather than from faster analysis.

Our programs tend to involve multiple rich scientific disciplines. Disease biology, Multi-omics, Structural biology, Medicinal chemistry, Translational science, Reaction & process engineering, CMC, Biosynthesis – you get the gist. No single scientist can easily be an expert in all of them, nor is it easy for team members from each discipline to get timely access to experts in the others.

Our programs also demand the simultaneous application of multiple perspectives. Every decision must be weighed from Scientific, Translational, Regulatory, Commercial and Patient experience perspectives. To address this, at Aganitha, we’ve always trained our scientists in AI & Tech, our tech colleagues in Science, and everyone in the Business of Biopharma. By getting our science teams, data scientists, cloud engineers, and business analysts to author best-practice processes as Igniva™ Agentic AI workflows, we are now able to scale our efforts a lot more easily.

2.3 Better alignment to translational objectives

For our biopharma partners, the biggest shift happened in how experimental work was framed and progressed.

Agentic workflows helped articulate which questions could and should be answered by experiments, guided the selection or design of appropriate model systems and assays, and coordinated the design and synthesis of biological entities or chemical species required for those experiments.

Agents supported the generation, review, and validation of protocols, informed reagent selection and plate layouts, and helped analyze and interpret results in context. Just as importantly, they assisted scientists in asking the right follow-on questions and proposing the next set of experiments, while integrating seamlessly with existing LIMS, ELNs, and laboratory systems rather than working around them.

3. Institutionalizing Scientific Judgment

Previous automation waves in R&D largely stopped at analysis pipelines. Data went in, results came out, and humans supplied the judgment in between.

That judgment, however, is where most of the real work happens.

Scientific decision-making involves locating and accessing the current state-of-the-art, assessing applicability, weighing trade-offs, validating assumptions, and synthesizing evidence from multiple imperfect sources. Much of this knowledge is tacit, context-dependent, and unevenly distributed across teams.

A central design principle for our agentic workflows was therefore not to automate analysis alone, but to institutionalize best-practice scientific judgment. This meant explicitly capturing:

- 1. When a method is appropriate (and when it is not)

- 2. How to assess the reliability and usefulness of results

- 3. How to combine multiple lines of evidence

- 4. How to recognize failure modes early

This required more than “agent washing”, that is, simply wrapping existing tools with agents. It required carefully capturing the qualifications, guardrails, and evaluation criteria that experts use implicitly, and continuously refining them as programs progressed.

For example, our agentic workflows codified expert judgment on available methods for:

- 1. Elucidation of targets in different families and functional roles

- 2. Identification and assessment of epitopes for antibodies

- 3. Selection of mutagenesis sites in biocatalysis with enzymes

making this reasoning consistent and reusable across programs.

The result is workflows that make expert reasoning more consistent, auditable, and scalable.
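As a rough illustration of what "explicitly capturing" judgment can look like, the sketch below encodes three of the four items listed above (applicability, reliability checks, and known failure modes) as a small declarative record. It is a minimal sketch under our own assumptions, not the Igniva™ implementation: `MethodCard`, `assess`, the epitope example, and the 0.7 threshold are all hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MethodCard:
    """Hypothetical record of expert judgment about one analysis method."""
    name: str
    # When is the method appropriate? Evaluated against the program context.
    applies_when: Callable[[dict], bool]
    # Named checks on the result; each returns True when the result passes.
    reliability_checks: dict[str, Callable[[dict], bool]] = field(default_factory=dict)
    # Free-text failure modes surfaced to the reviewing scientist.
    known_failure_modes: list[str] = field(default_factory=list)


def assess(card: MethodCard, context: dict, result: dict) -> dict:
    """Return an auditable verdict rather than a bare result."""
    if not card.applies_when(context):
        return {"method": card.name, "status": "not_applicable", "failed_checks": []}
    failed = [label for label, check in card.reliability_checks.items()
              if not check(result)]
    status = "needs_review" if failed else "accepted"
    return {"method": card.name, "status": status, "failed_checks": failed}


# Illustrative card only: the criterion and threshold are placeholders,
# not validated scientific rules.
epitope_card = MethodCard(
    name="structure_based_epitope_prediction",
    applies_when=lambda ctx: ctx.get("has_antigen_structure", False),
    reliability_checks={
        "min_confidence": lambda res: res.get("confidence", 0.0) >= 0.7,
    },
    known_failure_modes=["flexible loops missing from the structure"],
)

verdict = assess(epitope_card, {"has_antigen_structure": True}, {"confidence": 0.5})
print(verdict["status"], verdict["failed_checks"])
```

Keeping the criteria as data rather than burying them in prompts or pipeline code is one way to make them reviewable and refinable as programs progress; combining multiple lines of evidence would need additional structure that this sketch leaves out.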

4. The Foundations Agents Depend On

In practice, we found that limitations of large language models were not the only bottlenecks.

What also constrained agentic workflows was the shape of the underlying data and the availability of relevant application APIs. Even the most capable models struggle when critical information is not in a processed form suitable for decisions to be made in R&D workflows.

Can data be shaped just-in-time with dynamically generated data pipelines? While code generation has indeed been a success story for Agentic AI, we are still far from the days when non-trivial data pipelines and applications are dynamically generated and executed, unattended or with minimal human supervision.

We learned early that meaningful Agentic AI in R&D requires deliberate investment in data foundations and application layers that are designed for reasoning, not just storage or retrieval.

This realization led us to build platforms such as DBTIPS™, which curate and continuously update disease- and target-centric intelligence spanning scientific literature, multi-omics (e.g., population genomics and functional genomics) evidence, pathways, tractability assessments, clinical context, and market signals. Crucially, this information is exposed through consistent APIs that both agents and scientists can reason over.

The lesson was broader than any single platform. Agents can be hamstrung if the substrates they operate on are not fully ready. Without well-shaped data and dependable application interfaces, agentic systems quickly degrade into brittle code generation exercises.

With the right foundations in place, however, agents begin to function as collaborators rather than unreliable assistants, capable of synthesizing evidence across modalities and contexts with far greater robustness.

5. Why R&D Cannot Be Served With Generic Agent Platforms

Scientific R&D places demands on agents that differ fundamentally from typical enterprise workflows.

5.1 Scientific Visualizations

Scientific visualizations are not optional outputs; they are reasoning artifacts. Agents must be able to generate, interact with, and interpret visualizations, not just produce tables or text.

Once again, while dynamic code generation is necessary for plotting scientific visualizations, the risk of errors is high. Therefore, in Igniva™, every Agentic workflow is integrated with the tools needed to generate visualizations.

Current-generation LLMs are already capable of taking multi-modal inputs and interpreting them, but most generic agent platforms do not leverage this capability. Igniva™ does, providing scientists with not just the raw visualizations but also the interpretations needed to make informed decisions.

5.2 Long-running processes that can cost a fortune

Many scientific computations are long-running and expensive. Managing cloud and HPC resources, scheduling workloads, and controlling costs are not infrastructure concerns that can be abstracted away; they are integral to responsible scientific work.

In Igniva™, we addressed this using an Infrastructure-as-Code (IaC) approach for provisioning and tearing down cloud clusters on demand and integrating with SLURM to schedule and manage workloads on managed HPC clusters.
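To make the cost-control point concrete, here is a minimal sketch of one possible guardrail: estimating a job's worst-case cost before rendering a SLURM batch script for it. The `#SBATCH` directives are standard SLURM options; everything else (`JobSpec`, `render_sbatch`, the `compute` partition name, and the per-CPU-hour price) is a hypothetical assumption for illustration, not a description of how Igniva™ does it.

```python
from dataclasses import dataclass


@dataclass
class JobSpec:
    name: str
    command: str
    cpus: int
    mem_gb: int
    hours: int
    partition: str = "compute"  # hypothetical partition name


def estimate_cost(job: JobSpec, usd_per_cpu_hour: float) -> float:
    """Upper-bound cost, assuming the job runs for its full time limit."""
    return job.cpus * job.hours * usd_per_cpu_hour


def render_sbatch(job: JobSpec, budget_usd: float, usd_per_cpu_hour: float) -> str:
    """Render a batch script, refusing jobs whose worst case exceeds the budget."""
    cost = estimate_cost(job, usd_per_cpu_hour)
    if cost > budget_usd:
        raise ValueError(
            f"{job.name}: estimated ${cost:.2f} exceeds budget ${budget_usd:.2f}"
        )
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job.name}",
        f"#SBATCH --partition={job.partition}",
        f"#SBATCH --cpus-per-task={job.cpus}",
        f"#SBATCH --mem={job.mem_gb}G",
        f"#SBATCH --time={job.hours:02d}:00:00",  # hard wall-clock limit
        job.command,
    ]
    return "\n".join(lines) + "\n"


job = JobSpec(name="docking", command="python dock.py", cpus=16, mem_gb=64, hours=4)
print(render_sbatch(job, budget_usd=10.0, usd_per_cpu_hour=0.05))
```

The rendered script could then be handed to `sbatch`; the point of the sketch is only that the budget check happens before anything is scheduled, so an agent cannot silently launch an expensive run.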

5.3 Iterations, Contextual Analysis Pathways & Collaborations

Science in R&D programs is inherently iterative and collaborative. Hypotheses evolve, methods are revisited, and results are reinterpreted in light of perspectives from multiple disciplines.

In Igniva™, we addressed this by building a platform that allows scientists to iterate on workflows and share insights with their peers. For example, cell-type labeling in single-cell transcriptomics (scRNA-seq) data analysis is an iterative and context-sensitive process. Through Aganitha DISTILL™, scientists can process and re-process the same single-cell dataset with different cell-type annotation methods, see which method brings out the insights that can best explain the biology of interest, save, compare, and share outcomes from all iterations with peers. DISTILL™ APIs are integrated into Igniva™ workflows.

A significant fraction of R&D analysis is highly context-dependent and not just iterative. In genomics, for example, the choice of analysis pathway depends critically on the biological question being asked and the data available to answer it. Population genomics workflows used in different instances of target or biomarker identification, mechanism understanding, or responder–non-responder stratification often diverge early due to contextual differences.

In some disease contexts, insight emerges from co-localization of GWAS signals with available pQTL data; in others, results from GWAS are analyzed together with results from available PheWAS and/or TWAS analyses. These are not interchangeable pipelines, but distinct investigative pathways that reflect different hypotheses and decision needs.

Addressing this led us to invest in LOCUS™, a platform that brings together a comprehensive collection of genomics and multi-omics analysis pipelines organized around these investigation pathways. Integrated into agentic workflows, this allows scientists to explore, compare, and iterate across context-appropriate analyses collaboratively, rather than forcing every problem through a single predefined workflow.
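The idea that available data determines which investigation pathways are even on the table can be sketched in a few lines. This is a deliberately simplified illustration using only the examples mentioned above (GWAS with pQTL co-localization, or GWAS with PheWAS and/or TWAS). The function name and pathway labels are hypothetical, and real pathway selection also depends on the biological question, which this sketch ignores.

```python
def candidate_pathways(available: set[str]) -> list[str]:
    """Return investigation pathways supported by the available data types."""
    if "gwas" not in available:
        return []  # both example pathways start from GWAS signals
    pathways = []
    if "pqtl" in available:
        pathways.append("gwas_pqtl_colocalization")
    extras = sorted(available & {"phewas", "twas"})
    if extras:
        pathways.append("gwas_with_" + "_".join(extras))
    return pathways


print(candidate_pathways({"gwas", "pqtl"}))
print(candidate_pathways({"gwas", "twas", "phewas"}))
```

Returning a list of candidates, rather than picking one, leaves the choice between distinct pathways with the scientist.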

Across these use cases, all Igniva™ sessions are saved, shareable, resumable, versioned, and available for audit—preserving both scientific insight and decision lineage.

6. Governance Is Not a Layer – It’s a Capability


As agentic workflows began to scale, governance emerged as a central concern.

This was not primarily about compliance. It was about visibility: understanding where agents were helping, where they were failing, how much they were costing, and where further automation made sense.

We found that governance requires instrumentation. Igniva™ agents generate telemetry streams that are aggregated and visualized in a dashboard.
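A minimal sketch of the kind of aggregation such a dashboard might sit on top of is shown below. The event schema (`workflow`, `status`, `cost_usd`) and the `summarize` function are assumptions made for illustration; the actual telemetry format is not described here.

```python
from collections import defaultdict


def summarize(events: list[dict]) -> dict[str, dict]:
    """Aggregate per-workflow run counts, failures, cost, and failure rate."""
    totals = defaultdict(lambda: {"runs": 0, "failures": 0, "cost_usd": 0.0})
    for event in events:
        row = totals[event["workflow"]]
        row["runs"] += 1
        row["failures"] += int(event["status"] != "ok")
        row["cost_usd"] += event["cost_usd"]
    return {
        name: {**row, "failure_rate": row["failures"] / row["runs"]}
        for name, row in totals.items()
    }


# Hypothetical telemetry events emitted by agent runs.
events = [
    {"workflow": "epitope_mapping", "status": "ok", "cost_usd": 1.5},
    {"workflow": "epitope_mapping", "status": "error", "cost_usd": 2.5},
    {"workflow": "target_triage", "status": "ok", "cost_usd": 0.5},
]
for name, stats in summarize(events).items():
    print(name, stats)
```

Even this small summary answers the visibility questions raised above: where agents are failing and how much each workflow costs.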

Over time, the governance dashboard revealed a deeper opportunity: treating accumulated workflow data as institutional memory. With appropriate safeguards, this history becomes a resource for continuous improvement rather than an audit burden.

Agentic AI without governance is opaque and risky. Agentic AI with governance becomes a learning system.

7. When Agents Stop Being Tools and Start Becoming Team Members

Initially, agents were invoked on demand, much like any other tool.

As adoption increased, it became clear that this model left value on the table. The most effective agents were those that maintained continuity – tracking program context, observing decisions over time, and participating persistently in workflows.

This points toward a future where agents function less like utilities and more like full-time program members: sharing visibility into objectives, constraints, and progress. In fact, this is essential for the operating model shift we want to accomplish: leaner, faster, and more effective translational R&D.

Also, when agents maintain continuous visibility into program evolution, downstream artifacts such as regulatory documentation emerge naturally from the program or process context. Teams can be proactively guided to maintain compliance, and filings can be reviewed against the complete history of decisions, changes, and execution.

Realizing this vision requires additional engineering and organizational change. But the payoff is substantial: stronger formalization of how work gets done, reduced dependency on individual memory, and more consistent execution across programs.

8. Why Agentic AI Requires Specialists, Not Just LLM Access

The growing availability of powerful models has lowered the barrier to experimentation. It has not eliminated the need for specialization.

We increasingly encounter “agentic” offerings that amount to little more than existing tools wrapped with conversational interfaces. While useful, such approaches fall short in complex R&D settings.

Agentic workflows must be validated continuously against real programs, updated as science evolves, and adapted to diverse contexts. This requires sustained exposure to live work, not just model expertise.

For some organizations, this has led to accelerated partnership models: starting with proven workflows, co-developing roadmaps for embedding agents into R&D functions, and scaling from high-impact use cases.

The focus has been on building durable capability rather than accelerating deployment for its own sake.

Concluding thought: Defining the Next Operating Model for R&D

A persistent concern in AI adoption is the uneven, or “jagged”, nature of current large language model capabilities, which can make it difficult for scientists to place unqualified trust in autonomous systems. At the same time, even within these constraints, the value Agentic AI can deliver today, when applied thoughtfully within real workflows, is substantial.

This tension is best resolved not by waiting for perfect models, but by designing operating models that account for limitations while compounding strengths.

Agentic AI will not replace scientists. What it will replace are fragile, implicit, and non-scalable ways of working, where critical knowledge lives in individuals rather than systems, and where learning dissipates at the end of each program.

For organizations navigating the complexity of translational R&D, the question is no longer whether Agentic AI will matter, but how it should be applied responsibly and effectively.

If you are exploring how to shift toward a leaner, decision-focused operating model – one that scales expertise, preserves institutional memory, and remains grounded in real programs – we hope this write-up was useful. We would be glad to understand your context and discuss how Igniva™ supports teams getting started with this operating model.